Mapping Artificial Intelligence by Exploring Possibility Spaces

How casual creators can quickly map dimensions and find superlatives in generative models like GPT-3 and BigGAN

How intelligent are deep generative models—the kinds of models that fill in missing pixels in images, produce photo-realistic images of people, and generate stories that are often indistinguishable from what a human might have written? Perhaps unsurprisingly, intelligence is complex and tricky to measure. Rather than trying to evaluate intelligence, which is often confused with pattern matching, I want to focus on brilliance. How often and in what contexts does a deep generative model go beyond human-level performance? Back in 2016 in the second game of the historic AlphaGo vs. Lee Sedol Go match, Fan Hui, a professional Go player, remarked on move 37, “it's not a human move. I've never seen a human play this move… So beautiful. Beautiful. Beautiful. Beautiful.”

Last week, OpenAI launched a private beta API for GPT-3, a neural network model for text generation. Depending on your perspective, the first applications seem like an instance of either alien-play magic or rote memorization.

In this essay, I’ll explain how the casual creator framework for rapidly mapping a generative model’s possibility space can be applied to generative neural network models to surface superlative artifacts and generate a mental map of how an AI behaves. We’ll look at a case study from Meet the Ganimals and consider how the same approach could be applied to GPT-3. But first, let’s examine a couple of motivating examples of what some may consider superlative artifacts. I want to home in on the difference between brilliance and a cheap resemblance to the real thing. These examples include a how-to book on writing a bestseller, front-end scripting, the transformation of plain English into equations, a knowledge macro for Excel, and answers to the meaning of life.

Brilliance or Simulacra?

The following example comes from the German artist Mario Klingemann, who used GPT-3 to produce a two-page story on how to write a bestseller in a week. It’s a grammatically correct, conceptually coherent, and stylistically spot-on satire of a self-help guru. My favorite line is the have-it-all contradiction: “I can also tell you exactly how to sell a bestseller without publishing that many copies.” This story isn’t the most informative or cohesive, but Klingemann’s application of GPT-3 is a near-perfect satire of online self-help writing.

The next example application got the most attention on Twitter. Here, Sharif Shameem, the founder of debuild.co (a website that lets you build web apps just by describing what they should do), shows how GPT-3 can generate JSX code (HTML syntax for React) from simple English. The gripping idea here is that you could create code without knowing how to code. One thing to keep in mind is that this text generation model (and its many fine-tuned versions) has no formal understanding of logic. In other words, GPT-3 is performing an impressive feat of pattern matching. The reality of front-end scripting is that the patterns are much more obvious than non-developers might think.

There are many standardized approaches for evaluating the performance of text generation models. In the paper presenting GPT-3, Language Models are Few-Shot Learners, GPT-3 is evaluated on a wide range of natural language processing benchmark tasks, which include closed-book question answering, finding the best ending to a story, translation, figuring out which word a pronoun refers to in grammatically ambiguous cases, common sense reasoning, arithmetic, and a series of other tasks. Long story short, a GPT-3 model that is shown only a handful of examples does really well on most of these tasks. In an attempt to showcase GPT-3’s rote memorization, I’ll highlight GPT-3’s performance on simple arithmetic with a figure from the paper.

On the X axis is the number of parameters in the text generation model, and on the Y axis is the accuracy on the arithmetic task. Let’s focus on the accuracy scores for GPT-3, which is the model with 175 billion parameters on the far right. What you see is that GPT-3 is near perfect in two-digit addition and subtraction, but it’s only 80% accurate on three-digit addition (e.g. 120+201) and only 25% accurate on four-digit addition (e.g. 1543+8008). Computers are really good at arithmetic, so what is going on here? GPT-3 is revealing its ability to parrot what it has seen and its inability to “think” critically. The fact is that GPT-3 has been trained on snapshots of the Common Crawl, a corpus containing the text of nearly every publicly available website on the Internet. It’s reasonable to assume the model has seen the answer to nearly all two-digit addition problems. After all, there are only 8,100 possible two-digit addition problems (90 choices for each operand) and over 3 billion webpages in the Common Crawl. It’s impressive that the model can perform pattern matching for four-digit addition at all, but the text generation model clearly doesn’t “understand” arithmetic. (Update 3/8/2021: A recent paper goes into more detail on how poor this model is at solving math questions.)

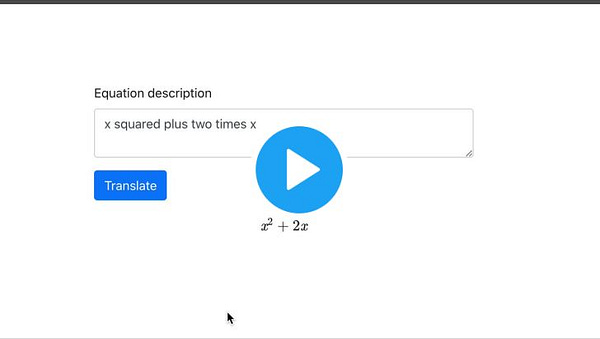
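As a rough sanity check on the 8,100 figure, here is a minimal sketch that counts addition problems by operand length. It assumes that both operands have exactly the stated number of digits (no leading zeros) and that order matters, so 12+34 and 34+12 count separately. The function name is my own, purely for illustration.

```python
def count_addition_problems(digits: int) -> int:
    """Count ordered pairs (a, b) where both operands have exactly `digits` digits."""
    low = 10 ** (digits - 1)   # smallest number with that many digits, e.g. 10
    high = 10 ** digits        # one past the largest such number, e.g. 100
    operands = high - low      # how many numbers have that many digits, e.g. 90
    return operands * operands


for digits in (2, 3, 4):
    print(f"{digits}-digit addition problems: {count_addition_problems(digits):,}")
```

Under these assumptions the count grows a hundredfold with each extra digit, which fits the idea that a model leaning on memorized examples would find longer sums much harder to cover.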

Speaking of math, there’s another GPT-3 example by Shreya Shankar that shows a pretty neat transformation of plain English to formal mathematical equations. As Shreya points out though, the transformation is amusingly brittle. When she replaces the = symbol in the equation description with the word “equals,” the resulting equation changes.

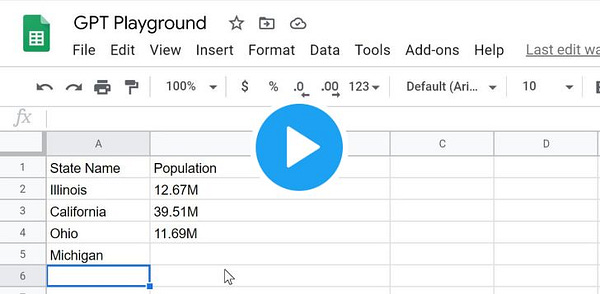

And for those who do not like Greek formulas or code, another example by Paul Katsen shows the ultimate spreadsheet function for filling in missing data like population sizes and founding dates of US states. This looks quite impressive until we double-check the data’s accuracy and discover the numbers are mostly wrong. What is peculiar (and consistent with the pattern-matching paradigm) is that the numbers are not absurdly wrong but just slightly wrong.

Despite its brittleness and inaccuracies, some might wonder what GPT-3 has to say about the meaning of life. Below is a conversation between a human and an AI produced by Sid Bharath. The conversation may be somewhat inspiring, but it’s clearly a simulation that pattern-matches on what has been said before. There’s nothing new, and the model clearly has no sense of humor. Instead of brilliance, the GPT-3 responses are banal and arguably pseudo-profound bullshit, which psychologists define as “seemingly impressive assertions that are presented as true and meaningful but are actually vacuous.”

Here’s another approach by Paras Chopra, who asked how famous people in history would answer the question of the meaning of life. Now, these statements are impressive in how plausible they sound, but I’m skeptical that these people would agree with the GPT-3 generated statements.

Given that GPT-3 is trained on what is written on the Internet, we should expect that the next version of the text generation model will likely include this essay that you are currently reading and the linked tweets. Maybe a healthy dose of critical writing will inspire a future AI to develop a sense of humor and suggest 42 as the answer to life, the universe, and everything. A future model that can explain its epistemological inspiration would be pretty impressive. But, given that GPT-3 is a pattern matching algorithm, we should keep in mind it lacks a lived experience. So, rather than seek out a mindless parroting of the meaning of life, Grady Booch suggested a quote from Joseph Campbell that perhaps best answers the question “What is the meaning of life?” with a reframing:

To recap, there’s certainly an impressiveness in GPT-3’s ability to translate plain English to front-end code and mathematical formulas. But these translations are brittle, and the Excel macro for missing knowledge is more of an idea than a reality. Likewise, the meaning of life answers are simulacra, unsatisfactory imitations that sound deceptively good but miss the point. From what I have seen, the most brilliant artifact is Klingemann’s “The Manual—Or: How to Write a Bestseller with the Aid of AI in a Week,” which is genius for how it satirizes the dark side of the self-help industry and its lack of originality. This genius comes out of Klingemann’s aesthetics and sense for where the technology is most provocative. GPT-3 can be a useful tool, but we have yet to find particular brilliance in it, so let’s return to the motivating question: how do we rapidly map GPT-3’s possibility space and discover potential displays of beyond-human brilliance?

Mapping Brilliance with Casual Creators

Casual creators are a genre of systems explained in Kate Compton’s PhD thesis that are driven by the human pursuit of creativity for its own sake and produce artifacts within a limited-yet-meaningful domain space. Conceptually, casual creators are a useful framework for understanding how interactive and generative technology combine with human creativity. These systems allow and encourage anyone, regardless of technical training, to explore the possibility space of a generative model. The human labor for these systems is relatively inexpensive because people tend to explore and surface artifacts from casual creators based on their own curiosity and the sense of pride in producing these artifacts.

A casual creator on top of GPT-3 could look like a simple text interface connected to the GPT-3 API, which allows a user to (a) enter a series of patterns to fine-tune the model and then (b) seed an input to produce an artifact. Such an interface would not require the user to have any technical software or machine learning skills. Any of the previously discussed anecdotes could be built using this format. Moreover, this interface could allow anybody to explore new patterns and produce potentially compelling creations like synthetic haikus, greeting cards, recipes, stand-up comedy routines, motivational speeches, legal arguments, and many other possibilities.
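To make the two steps concrete, here is a minimal sketch of what the core of such an interface might do. It is not the GPT-3 API: the function names and the `Input:`/`Output:` layout are my own assumptions, and the "patterns" are simply placed in the prompt as examples rather than used to update the model's weights. The actual call to a text generation service is left as a pluggable `generate` function.

```python
from typing import Callable, List, Tuple

Pattern = Tuple[str, str]  # (example input, example output)


def build_prompt(patterns: List[Pattern], seed: str) -> str:
    """Step (a) and (b): lay out the user's patterns, then the seed to complete."""
    blocks = [f"Input: {src}\nOutput: {dst}" for src, dst in patterns]
    blocks.append(f"Input: {seed}\nOutput:")  # the model continues from here
    return "\n\n".join(blocks)


def create_artifact(patterns: List[Pattern], seed: str,
                    generate: Callable[[str], str]) -> str:
    """Send the prompt to any text generator and return the trimmed completion."""
    return generate(build_prompt(patterns, seed)).strip()


def echo_generator(prompt: str) -> str:
    """Hypothetical stand-in so the sketch runs without a model or network."""
    return " (model completion would appear here)"


patterns = [("ocean", "Waves fold into foam"), ("winter", "Snow quiets the road")]
print(build_prompt(patterns, "autumn"))
print(create_artifact(patterns, "autumn", echo_generator))
```

Keeping the generator as a plain function argument means the same interface could sit on top of any text model, and a user only ever has to type examples and a seed.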

The predecessor of GPT-3, GPT-2, was good at pattern matching and problematically prone to racism and sexism as a result of this pattern matching. The GPT-3 paper makes it clear that the model is even better at pattern matching and still problematically biased. Now, the interesting question is where to find the moments of beauty and brilliance in GPT-3 that resemble the alien-play by AlphaGo. That’s what casual creators can be uniquely positioned to help discover.

A large group of digital explorers and citizen scientists can quickly expose interesting dimensions and superlative aspects of a neural network generative model. As an example, Meet the Ganimals, a casual creator designed to rapidly explore the possibility space of BigGAN (a generative neural network model for producing synthetic, realistic images of objects), can provide a case study on how we might discover brilliance in text generation models like GPT-3.

Meet the Ganimals is an interactive website that allows users to generate images of hybrid animals (called ganimals) and breed them to create more hybrid animals. In just a couple of months, thousands of users visited Meet the Ganimals and generated tens of thousands of ganimals. Based on which ganimals people chose to combine and how people rated them, we discovered trends in how morphological features are related to aesthetic preferences. For example, hybrid animals containing a dog tend to be seen as cuter than hybrid animals that don’t. Likewise, insects make hybrid animals seem creepier. We also discovered the Golden Foofa, which is a combination of a goldfish and a golden retriever. You can find the Golden Foofa in the image below in the middle of the top row.

If you take a look at the list of hybrid animals in folklore or Jorge Luis Borges’s Book of Imaginary Beings, you’ll notice that past myths never considered a goldfish-golden retriever hybrid. (Or at least, I haven’t found such a combination yet.) But, if you’re willing to open your mind to the odd possibility of the Golden Foofa, you might find the ganimal a pretty cute pet and a potentially awesome character in a future work of fiction.

By building a casual creator that allows people to explore the latent space between the originally trained upon categories, a striking Golden Foofa quickly emerged from the sea of statistical averages. In a recent pre-print co-authored by Zivvy Epstein, Océane Boulais, Skylar Gordon and me, we describe Meet the Ganimals as “an example how casual creators can leverage human curation and citizen science to discover novel artifacts within a large possibility space.” This research was inspired by a curiosity around the aesthetic, emotional, and morphological dimensions that people perceive in never-before-seen images of hybrid animals produced by a generative model. Moreover, this research emerged from a desire to answer these aesthetic questions about hybrid animals without enumerating the entire possibility space, which mostly contains less interesting creations.
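A trend like "hybrids containing a dog are seen as cuter" comes down to comparing ratings across groups of ganimals. The sketch below shows one simple way that comparison could be made. It is not the analysis from the pre-print: the record layout, the feature tags, and every rating in it are invented placeholders for illustration only.

```python
from statistics import mean
from typing import Dict, List, Tuple

# One record per rating: (tags for the hybrid's parents, cuteness score).
Rating = Tuple[Tuple[str, str], float]


def mean_rating_by_feature(ratings: List[Rating], feature: str) -> Dict[str, float]:
    """Average score for hybrids that include `feature` versus those that don't.

    Raises statistics.StatisticsError if either group is empty.
    """
    with_feature = [score for parents, score in ratings if feature in parents]
    without_feature = [score for parents, score in ratings if feature not in parents]
    return {"with": mean(with_feature), "without": mean(without_feature)}


# Made-up toy ratings, NOT data collected by Meet the Ganimals.
toy_ratings: List[Rating] = [
    (("dog", "fish"), 4.0),
    (("dog", "owl"), 5.0),
    (("beetle", "fish"), 2.0),
    (("owl", "fish"), 3.0),
]

print(mean_rating_by_feature(toy_ratings, "dog"))
```

A real study would also need enough ratings per group and some check that the gap is not just noise, but the basic shape of the question is this simple.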

The Golden Foofa is different than the alien-play move by AlphaGo, which is also different than Klingemann’s satire, but each of these examples speaks to a dimension of beauty hidden within a model. If I were to guess where another dimension of beauty might be lurking in GPT-3, I would explore pattern matching for poetry. I would try to fine-tune the model to translate a paragraph of text into iambic pentameter (or other poetic frameworks like a rhyme scheme or set of alliteration) while preserving the original meaning of the text. These beautiful artifacts of generative models arise from an open-ended exploration driven by curiosity. In the process of mapping brilliance, we begin to develop a mental map of the generative model’s synthetic abilities.

The N-Dimensional Mental Map

A standard, visual, two-dimensional map of GPT-3’s problem-solving abilities or BigGAN’s depictions of never-before-seen hybrid animals would fail to capture the high-dimensional space within which brilliance lurks. Instead of visualizing a map of a wildly expansive possibility space, casual creators can help us form mental maps for how artificial intelligence behaves. We have already seen instances of the impressiveness, banalities, and problems of extreme pattern matching in AI systems, but what we’re really curious about is where the edge cases of brilliance reside. By searching for those, we also develop a sense for what does not work. Meet the Ganimals offers a case study in exposing novel artifacts in BigGAN, and this paradigm can be applied to GPT-3 and future AI systems. Be careful though: this exploration has an effect on us too. As Ralph Waldo Emerson once wrote, “the mind, once stretched by a new idea, never returns to its original dimensions.”

Thanks to Zivvy Epstein, Océane Boulais, and Pat Pataranutaporn for reviewing drafts of this.


And, now for your moment of zen. Here is Océane Boulais as Tara Darwin guiding us through the fictional world of Ganimals.